Health gadgets continue to evolve in many forms and shapes – from something that fits in your pocket to something that is wearable or walkable. Everyday objects are turning into “Smart objects”, building the foundation for the next version of the Internet. And it’s not all smoke and mirrors. So let’s talk about mirrors.

Fairy tales and science fiction stories often pave the way to real-world technology. Magic mirrors appear in Snow White and in Harry Potter's world. Now you can get one, too, made by the Hong Kong company James Law Cybertecture International.

The Cybertecture Mirror can show you the weather or the latest weight readings reported by your scale. It can display a TV channel, let you browse Facebook or Twitter, and help you exercise. Impressive, but much more is still to come.

Could a mirror tell us how healthy we are? For example, could it measure our heart rate from a distance? Sure it could. This has already been demonstrated with a concept prototype (Cardiocam, MIT Media Lab; Poh et al. 2010), although its designer is now focusing on mobile devices (see his company, Cardiio).

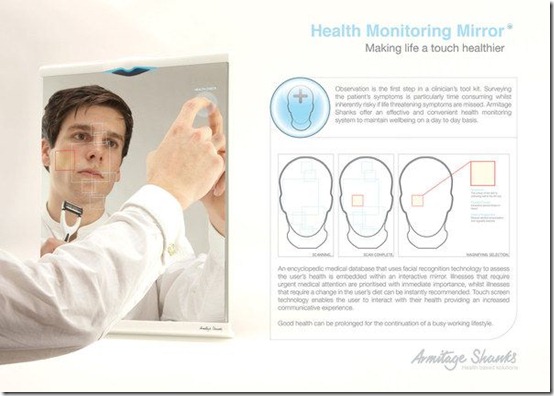
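To give a feel for how a camera can read a pulse, here is a minimal sketch. It assumes the face has already been found in each video frame and the green-channel pixels averaged into one number per frame (the green channel is often reported to carry the strongest pulse signal). Poh et al. worked with all three color channels and separated them with blind source separation (independent component analysis); this sketch skips that step and simply looks for the strongest frequency in an assumed 0.75 to 4 Hz band (45 to 240 beats per minute). The function name and band limits are illustrative assumptions, not Cardiocam's code.

```python
import math

def estimate_heart_rate_bpm(green_means, fps, low_hz=0.75, high_hz=4.0):
    """Estimate pulse rate (beats per minute) from a per-frame average of the
    green channel over the face, by finding the strongest frequency inside a
    plausible heart-rate band. Hypothetical helper, not the Cardiocam code."""
    n = len(green_means)
    if n < 2 or fps <= 0:
        raise ValueError("need at least two samples and a positive frame rate")
    mean = sum(green_means) / n
    # Remove the constant skin-tone level so only the fluctuations remain.
    centered = [x - mean for x in green_means]
    best_freq, best_power = None, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n  # frequency (Hz) of DFT bin k
        if not low_hz <= freq <= high_hz:
            continue  # ignore slow drift and fast noise outside the band
        # Naive DFT for bin k: O(n) work per bin, acceptable for short clips.
        re = sum(c * math.cos(2 * math.pi * k * t / n) for t, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * k * t / n) for t, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    if best_freq is None:
        raise ValueError("no frequency bins fall inside the requested band")
    return best_freq * 60.0

# Synthetic 20-second clip at 30 frames/s: a 1.2 Hz pulse plus a slow 0.1 Hz drift.
fps = 30
trace = [100
         + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps)
         + 2.0 * math.sin(2 * math.pi * 0.1 * t / fps)
         for t in range(20 * fps)]
print(round(estimate_heart_rate_bpm(trace, fps)))  # 72
```

The slow drift, standing in for lighting changes, is larger than the pulse itself but falls outside the search band, so the estimate lands on the 1.2 Hz component (72 beats per minute). A real mirror would use an FFT library and much more careful filtering.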

What other health measurements could the mirror take during your regular morning hygiene routine? If a camera can detect minute changes in the color of your face to determine your heart rate, it could also read your facial expressions and emotions, or perform observational analysis, the first of the four diagnostic methods of traditional Chinese medicine.

Prototypes of computerized facial diagnostic systems have already been developed. One recent study (Li et al. 2012), for example, analyzes the lips. The software segments the lips from the rest of the face and extracts color, texture and shape features. Multi-class support vector machines, a supervised learning method, then classify the lips as deep red, purple, red or pale, and the system draws inferences about energy levels and circulation.
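The classification step can be sketched in a few lines, under heavy simplifying assumptions. The lips are assumed to be already segmented into a list of RGB pixels, only the mean color is used as a feature (the study also used texture and shape), and a nearest-centroid classifier is swapped in for the study's support vector machines so the example needs no external libraries. All function names are hypothetical and the training colors are invented for illustration; this is not a diagnostic tool.

```python
import math

def lip_color_features(pixels):
    """Mean (r, g, b) of already-segmented lip pixels, scaled to 0-1."""
    if not pixels:
        raise ValueError("no lip pixels supplied")
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / (255 * n) for i in range(3))

def train_centroids(examples):
    """examples: dict mapping a label to a list of pixel lists.
    Returns a dict mapping each label to its average feature vector."""
    centroids = {}
    for label, samples in examples.items():
        feats = [lip_color_features(s) for s in samples]
        centroids[label] = tuple(sum(f[i] for f in feats) / len(feats) for i in range(3))
    return centroids

def classify_lips(pixels, centroids):
    """Assign the label whose centroid is closest in Euclidean distance."""
    features = lip_color_features(pixels)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))

# Invented toy training data: one tiny patch of RGB pixels per example.
training = {
    "deep red": [[(140, 20, 30)], [(150, 25, 35)]],
    "purple":   [[(120, 50, 110)], [(110, 45, 120)]],
    "red":      [[(210, 60, 70)], [(200, 55, 65)]],
    "pale":     [[(220, 170, 170)], [(230, 180, 175)]],
}
centroids = train_centroids(training)
print(classify_lips([(205, 58, 68), (215, 62, 72)], centroids))  # red
```

A support vector machine would learn decision boundaries between classes rather than comparing against class averages, which matters once texture and shape features are added and the classes overlap.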

Health management applications will not be limited to smartphones or smart homes. All objects in our lives will gradually become “smarter.” Mobile phones can already manage vacuum cleaners and thermostats. Refrigerators can tweet, check Google calendars, download recipes, play tunes and alert us about food spoilage. Mirrors can monitor our weight and exercise.

There is still more emphasis these days on technological wizardry than on actual benefits, but data collected through different channels can be brought together for analysis and context.
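One simple way to bring separately collected channels together is to line up daily readings and check which factors move with a symptom, possibly after a delay of a day or two. The sketch below does this with lagged Pearson correlation. It is a hypothetical illustration, not the method used by any particular product; the function names, the lag range and the data are assumptions, and a strong correlation only suggests a connection worth checking, not a cause.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient; 0.0 if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # correlation is undefined; treat it as "no link"
    return cov / (sx * sy)

def strongest_links(factors, symptom, max_lag=2, min_points=3):
    """Correlate each daily factor on day t with the symptom score on day t + lag.
    Returns (factor, lag, r) tuples sorted by |r|, strongest first."""
    links = []
    for name, series in factors.items():
        n = min(len(series), len(symptom))
        for lag in range(max_lag + 1):
            xs, ys = series[:n - lag], symptom[lag:n]
            if len(xs) >= min_points:
                links.append((name, lag, pearson(xs, ys)))
    return sorted(links, key=lambda link: abs(link[2]), reverse=True)

# Invented daily log: the symptom score tracks the previous day's pollen level.
pollen = [1, 5, 2, 8, 3, 7, 4, 6, 2, 9]
steps = [3, 3, 4, 4, 5, 5, 6, 6, 7, 7]
symptom = [0] + pollen[:-1]
for name, lag, r in strongest_links({"pollen": pollen, "steps": steps}, symptom)[:3]:
    print(f"{name} (lag {lag} day(s)): r = {r:+.2f}")
```

Testing several lags per factor is what lets a delayed effect (pollen today, symptoms tomorrow) surface, but every extra comparison also raises the chance of a spurious match, so real systems need far more data than this ten-day toy log.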

Aurametrix is a new software product that helps identify the subtle cause-and-effect relationships that affect health, positively or negatively. It looks for connections between specific symptoms and weather, air quality, food, weight, heart rate, exercise, and work-related and personal care activities, and then suggests what might be affecting the symptoms, in which amounts and in which combinations. Systems like Aurametrix could eventually combine our own observations with data from the smart objects around us and generate valuable insights. And perhaps one day we won't regard a mirror on the wall that nags us about losing weight or comments on the bags under our eyes as an invasion of privacy. Let's build the future piece by piece, and they will come.

REFERENCES

Poh MZ, McDuff DJ, & Picard RW (2010). Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Optics Express, 18(10), 10762-74. PMID: 20588929

Li F, Zhao C, Xia Z, Wang Y, Zhou X, & Li GZ (2012). Computer-assisted lip diagnosis on traditional Chinese medicine using multi-class support vector machines. BMC Complementary and Alternative Medicine, 12(1). PMID: 22898352

Littlewort G, Whitehill J, Wu T, Fasel IR, Frank M, Movellan JR, & Bartlett MS (2011). The Computer Expression Recognition Toolbox (CERT). Proceedings of the 9th IEEE Conference on Automatic Face and Gesture Recognition.